BRITISH MACHINE VISION CONFERENCE 2019 (SPOTLIGHT SUBMISSION)

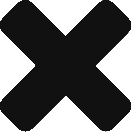

Non-line-of-sight (NLOS) imaging of objects not visible to either the camera or the illumination source is a challenging task with vital applications, including surveillance and robotics. Recent advances in NLOS reconstruction have been achieved using time-resolved measurements, which require expensive, specialized detectors and laser sources. In contrast, we propose a data-driven approach for NLOS 3D localization and object identification that requires only a conventional camera and projector. To generalize to complex line-of-sight (LOS) scenes with non-planar surfaces and occlusions, we introduce an adaptive lighting algorithm. This algorithm, based on radiosity, identifies and illuminates the LOS scene patches that contribute most to the NLOS light paths, and can factor in system power constraints. For real data taken from a hardware prototype, we achieve an average object identification accuracy of 87.1% across four object classes, and localize the NLOS object’s centroid in the occluded region with an average mean-squared error (MSE) of 1.97 cm. These results demonstrate the advantage of combining the physics of light transport with active illumination for data-driven NLOS imaging.

CONTRIBUTIONS OF THE WORK

Our specific contributions are:

- Solving NLOS 3D localization as well as NLOS object identification using convolutional neural networks with a conventional camera and projector

- An adaptive lighting algorithm that identifies the optimal LOS patches for maximizing the captured NLOS radiosity, and distributes illumination power among these patches under power constraints

- Robust performance for non-planar complex LOS geometries and improved NLOS localization and identification using our adaptive lighting algorithm.

WHAT IS ADAPTIVE LIGHTING?

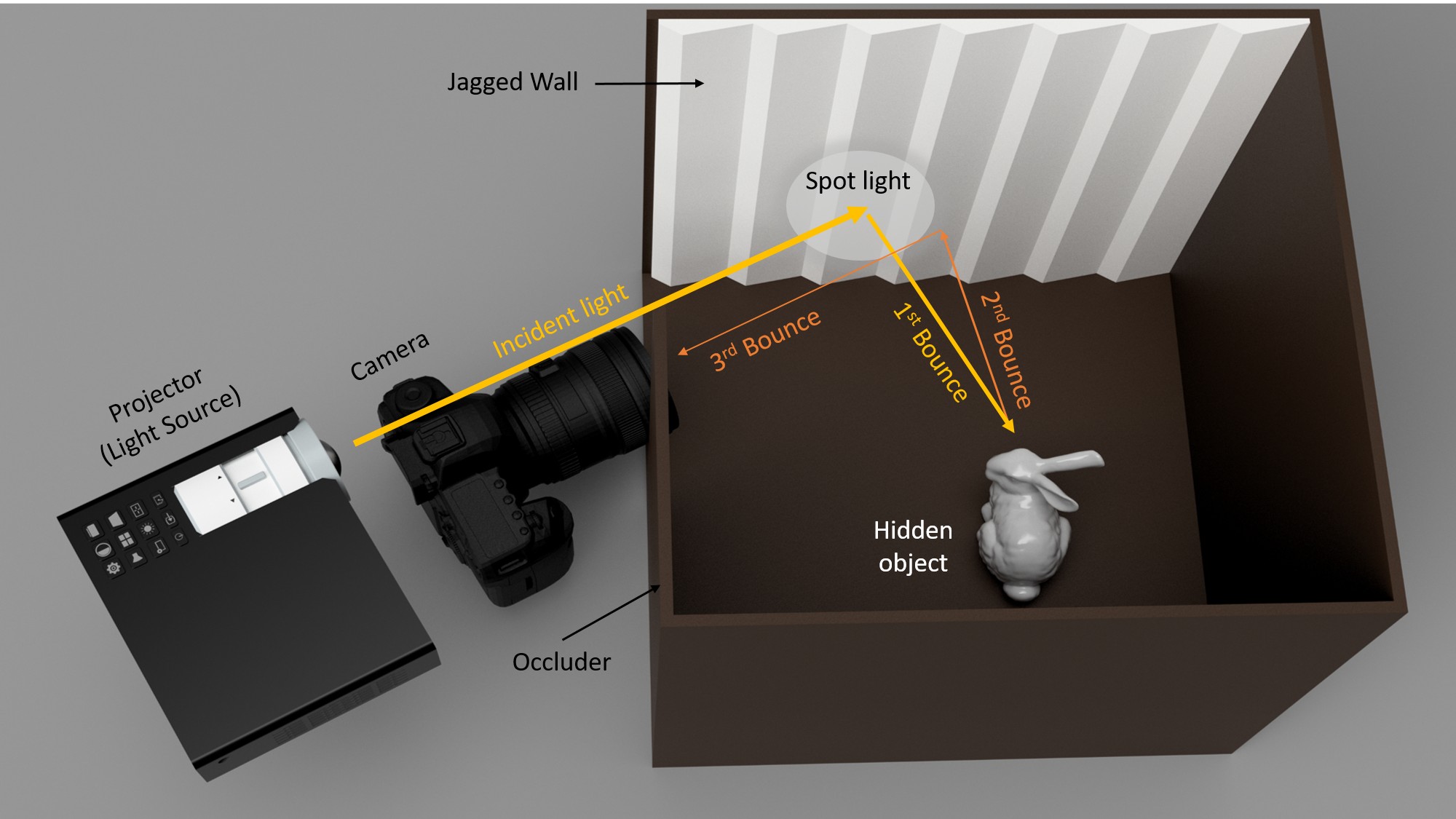

A central question we explore in this paper is: which lighting patterns in the LOS maximize NLOS localization and identification performance? In other words, given a LOS scene with N patches, which patches maximize the number of photons that travel along NLOS light paths? To approach this problem, we use physics-based constraints on light transport given by radiosity. Radiosity describes the transfer of radiant energy between surfaces in the scene, and is calculated from surface geometry and reflectances/emittances. The total radiosity leaving a single patch is its emission (i.e., if it is a light source) plus the sum of the radiosity it reflects from all other patches. Using the radiosity equation, we can calculate the radiosity along LOS and NLOS light paths for three-bounce light. If we seek to illuminate m patches out of N total LOS patches, we wish to maximize the following:

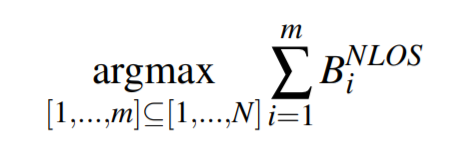
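For reference, the radiosity relation described above can be written in its standard (gathering) form, with B_i the radiosity of patch i, E_i its emission, \rho_i its diffuse reflectance and F_{ij} the form factor between patches i and j. As an illustration only, assuming that a projector-lit LOS patch i acts as an emitter with emission E_i and that the hidden object is discretized into patches indexed by k, unrolling the relation along the wall → object → wall path gives the third-bounce radiosity returned to LOS patch j. This is a sketch of the light-transport reasoning, not necessarily the exact objective shown in the figure above.

```latex
\begin{align*}
B_i &= E_i + \rho_i \sum_{j} F_{ij} B_j \\
B_j^{(3)} &= \rho_j \sum_{k} F_{jk}\, \rho_k \sum_{i} F_{ki} E_i
\end{align*}
```

Because the three-bounce term is linear in the emissions E_i, each LOS patch's contribution to the NLOS signal can be scored independently before choosing which m patches to illuminate.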

VARIOUS LIGHTING PATTERNS ON A COMPLEX WALL

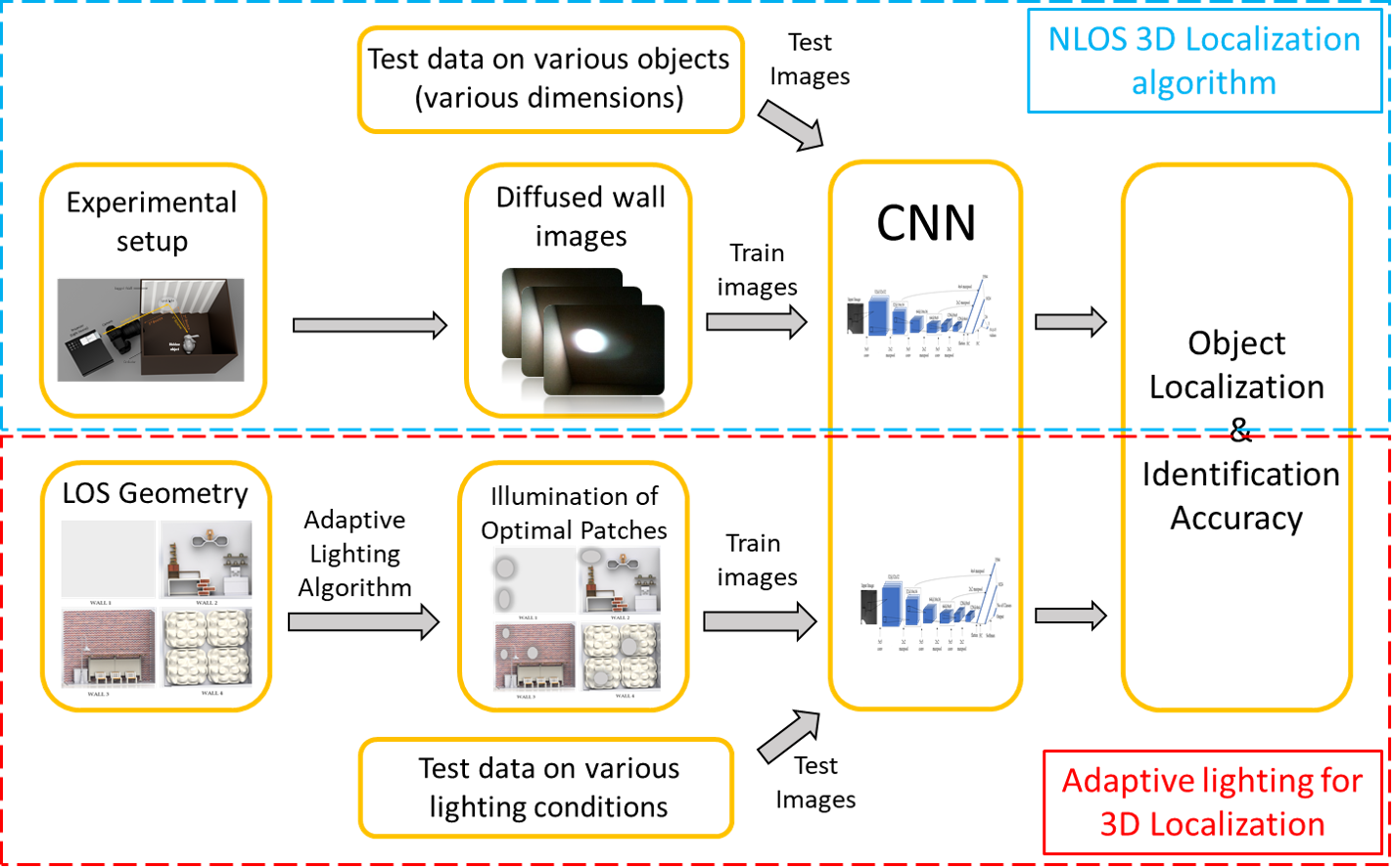
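To make the patch-selection step concrete, here is a minimal, hypothetical Python sketch; it is not the authors' implementation. It assumes precomputed form factors between LOS wall patches and NLOS object patches (the illustrative names `F_obj_from_wall` and `F_wall_from_obj`) plus per-patch reflectances, scores each wall patch by the three-bounce NLOS radiosity (wall patch i → object patch k → wall patch j) it produces per unit emission, keeps the top `m`, and greedily splits a total power budget under an assumed per-patch cap. All function and parameter names are illustrative assumptions.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def nlos_scores(
    F_obj_from_wall: Matrix,
    F_wall_from_obj: Matrix,
    rho_wall: Sequence[float],
    rho_obj: Sequence[float],
) -> List[float]:
    """Three-bounce NLOS radiosity, summed over wall patches, per unit emission at each wall patch.

    Hypothetical inputs:
      F_obj_from_wall[k][i]: form factor when object patch k gathers from wall patch i.
      F_wall_from_obj[j][k]: form factor when wall patch j gathers from object patch k.
    """
    n_wall, n_obj = len(rho_wall), len(rho_obj)
    # back[k]: radiosity leaving object patch k that the wall reflects, summed over wall patches j
    back = [
        sum(rho_wall[j] * F_wall_from_obj[j][k] for j in range(n_wall))
        for k in range(n_obj)
    ]
    # s[i] = sum_j rho_j * sum_k F_jk * rho_k * F_ki  (wall i -> object k -> wall j)
    return [
        sum(back[k] * rho_obj[k] * F_obj_from_wall[k][i] for k in range(n_obj))
        for i in range(n_wall)
    ]


def allocate_power(
    scores: Sequence[float], m: int, total_power: float, max_per_patch: float
) -> List[float]:
    """Split a power budget over at most m patches to maximize sum_i scores[i] * power[i].

    The objective is linear, so with non-negative scores, filling the highest-scoring
    patches up to the (hypothetical) per-patch cap is optimal.
    """
    best = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:m]
    power = [0.0] * len(scores)
    remaining = total_power
    for i in best:
        if remaining <= 0:
            break
        power[i] = min(max_per_patch, remaining)
        remaining -= power[i]
    return power


if __name__ == "__main__":
    # Toy scene with made-up numbers: 3 LOS wall patches, 2 NLOS object patches
    F_obj_from_wall = [[0.2, 0.1, 0.0], [0.0, 0.1, 0.5]]
    F_wall_from_obj = [[0.1, 0.0], [0.2, 0.2], [0.0, 0.1]]
    scores = nlos_scores(F_obj_from_wall, F_wall_from_obj, [0.5, 0.5, 0.5], [0.8, 0.4])
    print("scores:", scores)
    print("power:", allocate_power(scores, m=2, total_power=1.5, max_per_patch=1.0))
    # power: [0.5, 0.0, 1.0]
```

Because the three-bounce radiosity is linear in each patch's power, filling the best patches first is optimal for this simplified objective. Occlusions within the LOS scene enter this sketch only through the visibility terms folded into the form factors.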

SYSTEM PIPELINE

Our research uses deep learning to perform 3D localization and identification of an object in the non-line-of-sight (NLOS) of a camera and projector. Our complete system pipeline consists of a CNN trained on diffuse wall images and the adaptive lighting procedure to improve performance. We use two different network architectures for localization and identification.

EXPERIMENTAL SETUP

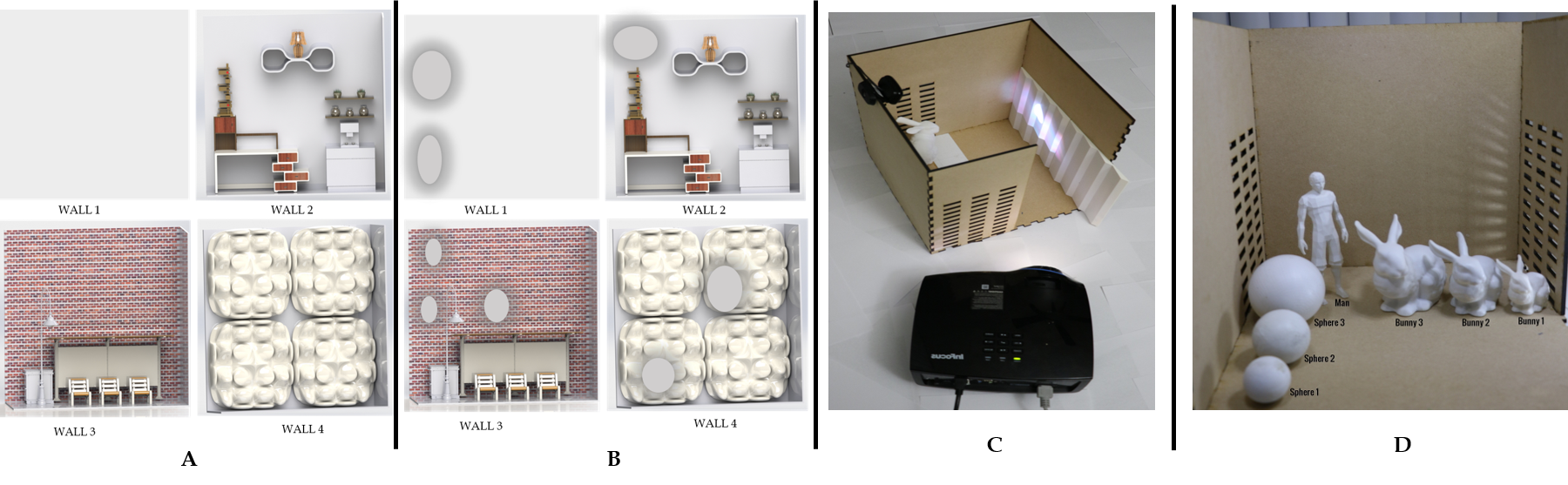

To validate our contributions, we demonstrate results using both a physically-based renderer and a real experimental setup in the lab.

(A) The various LOS scenes used for experiments; (B) the LOS scenes with the optimal adaptive lighting patterns visualized; (C) the real experimental prototype in the lab; (D) the 3D-printed objects used in real experiments.

MORE DETAILS

For an in-depth description of the technique and methods, please refer to our main paper and the supplementary paper.